Vision Based Autonomous Vehicle for High-Vegetated, Off-Road Terrain

Off-Roading: Autonomous Style!

Driving off-road is a very different experience from driving in an urban setting. An autonomous vehicle attempting it needs an accurate 3D reconstruction of its surroundings at both near and medium-to-long range, together with a ground classifier that can distinguish traversable ground from wooded areas, crops, and bushes.

Segmenting the Scene

Researchers at the University of Salento, Italy, in collaboration with the Institute of Intelligent Systems for Automation (ISSIA), National Research Council (CNR), Bari, Italy, have developed a multi-baseline stereo system for long-range perception by an autonomous vehicle operating in agricultural environments. The system segments the scene into ground and non-ground regions using a self-learning framework in which the ground model is learnt automatically and updated continuously while travelling. It was implemented within the project Ambient Awareness for Autonomous Agricultural Vehicles (QUAD-AV), funded by the ERA-NET ICT-AGRI action. The project team also included the Danish Technological Institute (DTI), Institut national de recherche en sciences et technologies pour l'environnement et l'agriculture (IRSTEA), Fraunhofer-IAIS, and CLAAS.

How It Works

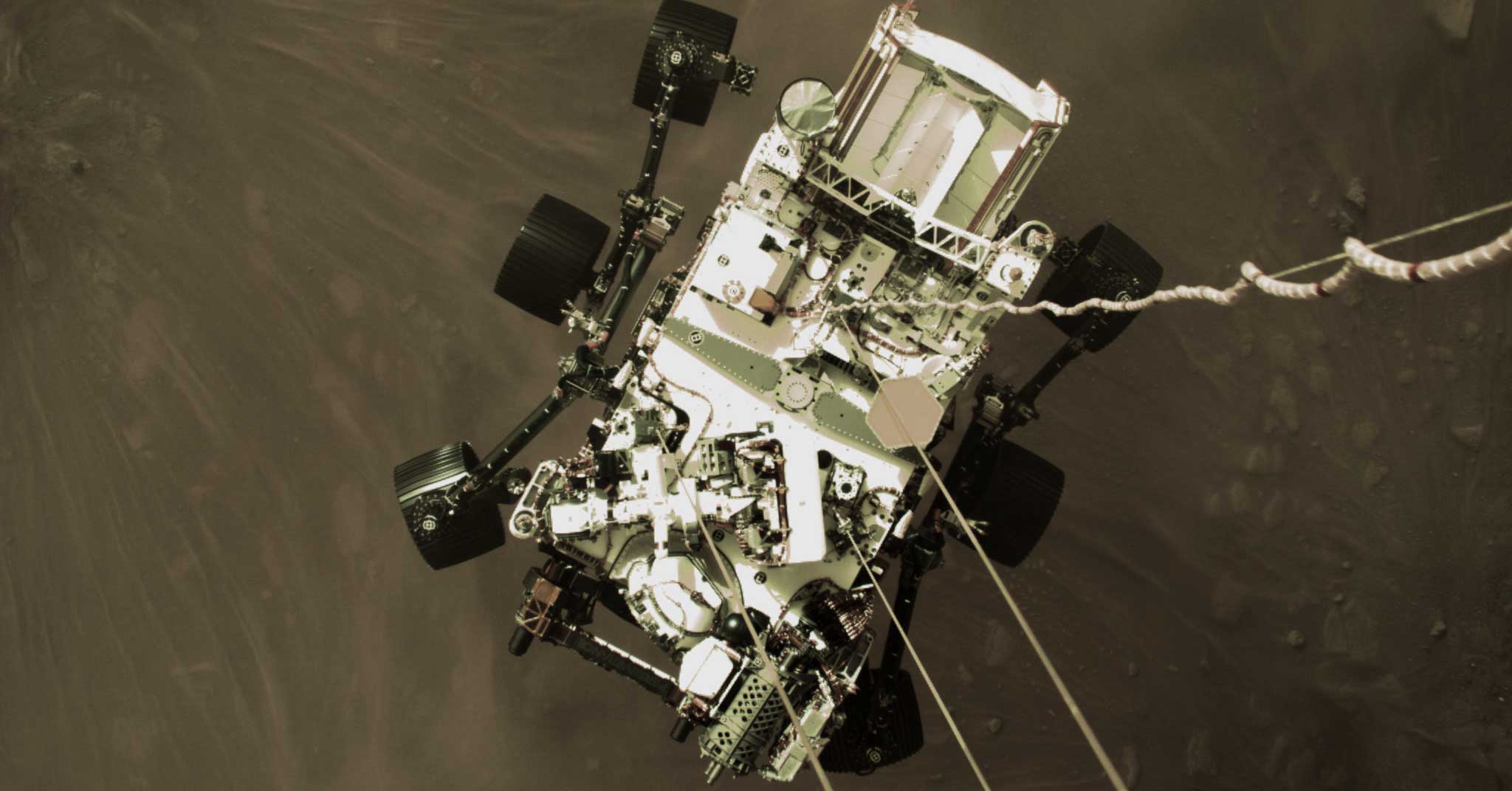

As shown in Figure 1, the system is composed of two trinocular heads: one with a short baseline and one with a long baseline. The short-range head is a FLIR Bumblebee XB3 three-sensor stereo camera. The long-range head is custom built from three FLIR Flea3 1.4 MP cameras. The three Flea3s are placed in line on an aluminum bar to form two baselines: 0.40 m, using the left and middle cameras, and 0.80 m, using the left and right cameras. By using the narrow baseline to reconstruct nearby points and the wide baseline for more distant points, the system takes advantage of the small minimum range of the narrow baseline while preserving the higher long-range accuracy of the wide baseline. Figure 2 shows the stereo frame mounted on top of an off-road vehicle made available by the QUAD-AV partner IRSTEA during an experimental campaign in October 2012.

Figure 1 - The multi-baseline stereovision system

Figure 2 - Experimental test bed provided by IRSTEA for field validation in the QUAD-AV project
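The baseline trade-off described above follows from the standard pinhole stereo relation Z = f·B/d. The sketch below is only an illustration: the focal length in pixels, the disparity search range, and the matching error are assumed values chosen for readability, not the parameters of the QUAD-AV cameras. Only the 0.40 m and 0.80 m baselines come from the text.

```python
# Illustrative sketch of the narrow-vs-wide baseline trade-off.
# Standard pinhole stereo model: depth Z = f * B / d.
# FOCAL_PX, MAX_DISPARITY_PX and DISPARITY_ERROR_PX are assumptions,
# not values reported for the Flea3-based system.

FOCAL_PX = 1000.0          # assumed focal length, in pixels
MAX_DISPARITY_PX = 64.0    # assumed upper bound of the disparity search
DISPARITY_ERROR_PX = 0.25  # assumed matching uncertainty, in pixels


def min_range(focal_px, baseline_m, max_disparity_px):
    """Closest depth that still fits inside the disparity search range."""
    return focal_px * baseline_m / max_disparity_px


def depth_error(focal_px, baseline_m, depth_m, disparity_error_px):
    """First-order depth uncertainty: dZ = Z^2 / (f * B) * dd."""
    return depth_m ** 2 * disparity_error_px / (focal_px * baseline_m)


if __name__ == "__main__":
    for baseline_m in (0.40, 0.80):  # baselines quoted in the article
        near = min_range(FOCAL_PX, baseline_m, MAX_DISPARITY_PX)
        err = depth_error(FOCAL_PX, baseline_m, 20.0, DISPARITY_ERROR_PX)
        print(f"B = {baseline_m:.2f} m: min range {near:.2f} m, "
              f"depth error at 20 m {err:.3f} m")
```

Under these assumptions, doubling the baseline halves the depth error at a given distance but doubles the minimum range, which is why a system might use the narrow pair for nearby points and the wide pair for distant ones.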

The 3D point cloud returned by either trinocular camera is used as input to a classifier that segments the scene into ground and non-ground regions. Figure 3 shows sample results.

Figure 3 - Classification results for two sample cases: (a, c) long range classification with Flea3 data; (b, d) short range classification with XB3 data
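The article does not describe the classifier's features or its statistical model, so the following is only a minimal sketch of what a self-learning ground classifier could look like, not the researchers' method. It assumes each terrain patch is summarized by a small feature vector (for example height and slope, both hypothetical), keeps a running per-feature mean and variance of patches accepted as ground, and rejects patches that lie too far from that model. The class name, the threshold, and the sample values are all illustrative.

```python
import math


class OnlineGroundModel:
    """Hypothetical self-updating ground model (per-feature Gaussian)."""

    def __init__(self, n_features, threshold=3.0):
        self.n = 0                        # number of ground samples seen
        self.mean = [0.0] * n_features    # running mean per feature
        self.m2 = [0.0] * n_features      # running sum of squared deviations
        self.threshold = threshold        # assumed cut-off, in std deviations

    def update(self, x):
        """Fold one ground sample into the model (Welford's algorithm)."""
        self.n += 1
        for i, xi in enumerate(x):
            delta = xi - self.mean[i]
            self.mean[i] += delta / self.n
            self.m2[i] += delta * (xi - self.mean[i])

    def distance(self, x):
        """Variance-normalized distance of a sample from the ground model."""
        d2 = 0.0
        for i, xi in enumerate(x):
            var = self.m2[i] / (self.n - 1) if self.n > 1 else 1.0
            var = max(var, 1e-6)          # guard against zero variance
            d2 += (xi - self.mean[i]) ** 2 / var
        return math.sqrt(d2)

    def classify_and_learn(self, x):
        """Label a patch; patches accepted as ground also update the model."""
        is_ground = self.distance(x) <= self.threshold
        if is_ground:
            self.update(x)
        return is_ground


if __name__ == "__main__":
    model = OnlineGroundModel(n_features=2)
    # Bootstrap with patches just ahead of the vehicle, assumed to be ground.
    for patch in [(0.00, 0.01), (0.02, 0.03), (-0.01, 0.02), (0.01, 0.00)]:
        model.update(patch)
    print(model.classify_and_learn((0.01, 0.02)))  # flat patch
    print(model.classify_and_learn((1.50, 0.90)))  # tall, steep patch
```

Updating the model only with patches it already accepts is what makes the scheme self-learning: the notion of "ground" drifts with the terrain as the vehicle travels, at the cost of depending on a trustworthy initial seed.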

The Right Cameras

The short-range system takes advantage of the easy-to-use, pre-calibrated, and highly accurate Bumblebee stereo camera. The Bumblebee XB3 comes with the Triclops Stereo SDK, which provides access to the images at every stage of the stereo processing pipeline and gave the researchers the flexibility to try different custom stereo approaches. For the long-range system, the University of Salento researchers chose Flea3 cameras for their compact size, image quality, and the flexible FlyCapture SDK for creating custom applications.

What's Next?

Parts of this research were published in Sensors, and parts will be presented at the 5th Annual IEEE International Conference on Technologies for Practical Robot Applications (TePRA), held in Boston, Massachusetts, this year.